A group of Google researchers has published a study revealing how AI is destroying the internet by spreading false information, a somewhat ironic finding given the company’s role in the rise of the technology. The accessibility and the literary or visual quality of AI-generated content have opened the door to new forms of abuse and amplified practices that were already widespread, further blurring the line between truth and falsehood.

Since its widespread deployment in 2022, generative AI has offered a host of opportunities to accelerate progress in many fields. AI tools now offer capabilities ranging from natural language understanding and complex audiovisual analysis to mathematical reasoning and the generation of realistic images. This versatility has allowed the technology to be integrated into critical sectors such as healthcare, public services, and scientific research.

However, as the technology develops, the risks of misuse are becoming increasingly concerning. Among these misuses is the spread of disinformation, which now floods the internet. A recent analysis by Google has shown that AI is currently the main source of image-based misinformation online. The phenomenon is exacerbated by the growing accessibility of these tools, which allow anyone to generate almost any content with minimal technical expertise.

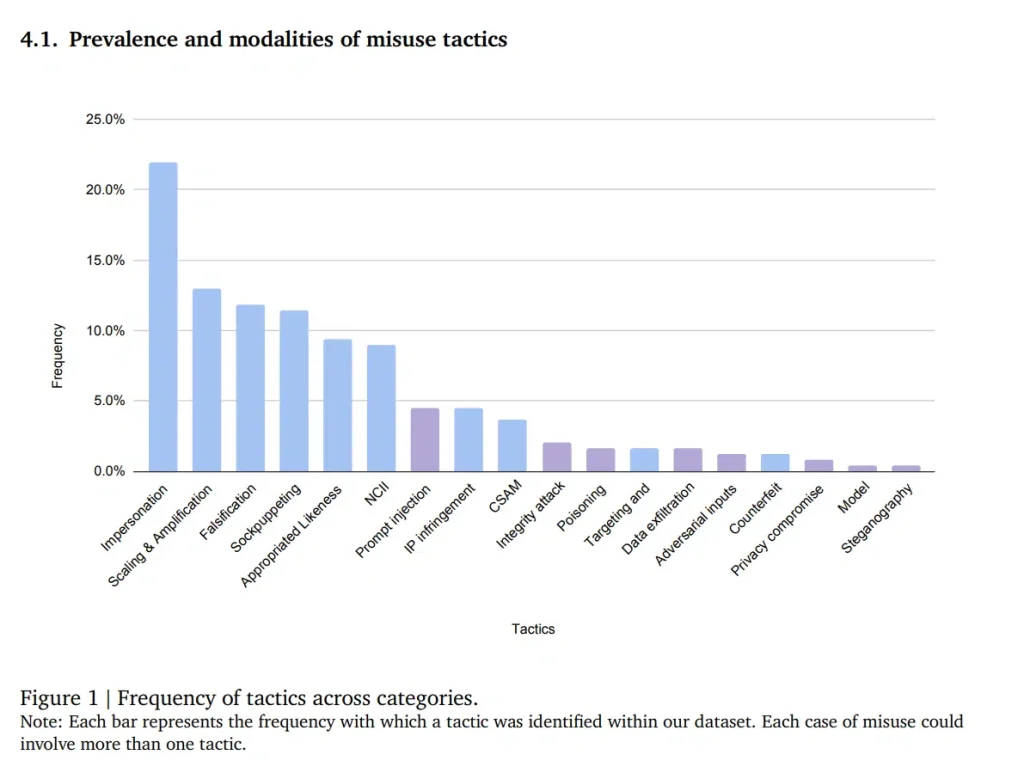

Each bar represents the frequency with which a tactic was identified in the dataset. Each case of misuse could involve more than one tactic. Image: Nahema Marchal.

While previous studies provide valuable information on the threats associated with AI misuse, they do not detail the specific strategies used to carry it out. In other words, little is known about the tactics malicious users employ to spread false information. As the technology becomes more powerful, understanding how misuse manifests itself is essential.

The new study, conducted by a Google team, sheds light on the different strategies used to spread AI-generated false information on the internet. “Through this analysis, we highlight the main and new patterns of misuse, including potential motivations, strategies, and how attackers exploit and abuse system capabilities across modalities (e.g., images, text, audio, video),” the researchers explain in their paper, released as a preprint on arXiv.

Abusive Uses That Don’t Require Advanced Technical Expertise

The researchers analyzed 200 media reports of AI misuse published between January 2023 and March 2024. From these reports, they identified the key trends underlying such misuse, including how and why people use the tools in an uncontrolled environment (i.e., in the real world). The analysis covered image, text, audio, and video content.

The researchers found that the most frequent forms of misuse were fake images of people and falsified evidence. Most of this fake content was reportedly spread with the apparent aim of influencing public opinion, facilitating fraud (or scams), and generating profit. Moreover, the vast majority of cases (9 out of 10) did not require advanced technical expertise, relying instead on the easily exploitable capabilities of the tools themselves.

Interestingly, many of these abuses are not explicitly malicious but are still potentially harmful. “The increased sophistication, availability, and accessibility of generative AI tools seem to introduce new forms of lower-level abuse that are neither overtly malicious nor explicitly violate these tools’ terms of use but still have concerning ethical ramifications,” the experts indicate.

This finding suggests that although most AI tools include safeguards meant to enforce ethical use, users find ways to circumvent them through cleverly worded prompts. According to the team, this amounts to a new, emerging form of communication that blurs the boundary between authentic and false information, used mainly for political outreach and self-promotion. This risks increasing public distrust of digital information while burdening users with the task of verifying it.

Users can also bypass these safeguards in other ways. For example, if a tool refuses a prompt that explicitly names a celebrity, a user can download that person’s photo from a search engine, upload it to an AI tool as a reference image, and then modify it as they wish.

However, the researchers note that relying solely on media reports limits the scope of the study. News outlets generally cover only the stories most likely to interest their target audience, which can introduce biases into the analysis. Moreover, the paper strangely does not mention any cases of misuse involving AI tools developed by Google…

Nevertheless, the results offer a glimpse of the extent of the technology’s impact on the quality of digital information. According to the researchers, this underscores the need for a multi-stakeholder approach to mitigating the risks of malicious use.