One of the most outstanding achievements of the human brain is its ability to learn. Neuromorphic computing attempts to transfer this fundamental brain function to machines, so that computers learn in much the same way we do. But how does neuromorphic computing work? And what are the advantages of such brain-inspired AI systems?

From the medieval Golem to the Terminator, from Metropolis to “Wall-E,” understanding the human brain, harnessing its properties, and endowing inanimate matter with human capabilities is a frequently addressed, usually threatening, often useful, but always fascinating topic. But how realistic are these ideas today? And what means do we actually have to explore and imitate a system as complex as the brain?

Researchers are trying to do just that: they are building computers modeled on the brain. These neuromorphic systems are designed to resemble the brain in both function and structure, including in the way they learn. Initial successes have already been achieved.

The brain as a model

Only at first glance does the brain appear to be a rewarding research object: it is a manageable size, and as long as scientists are content with mouse brains, there is no shortage of material to study. In reality, brain researchers face far harder conditions.

With roughly 100 billion nerve cells, the brain is the most complex organ in the human body, and it can only be observed at work in a living organism. One of its most essential characteristics quickly becomes clear: the whole is more than the sum of its parts. The brain is not just a collection of tens of billions of nerve cells; it is the complex interconnections between them that allow us to think and learn.

Thousands of genes for the brain

Like the rest of the body, the brain ultimately develops from a single cell, the fertilized egg. So there must be rules that govern how nerve cells interact during embryonic development, and according to current knowledge, these rules can only be stored in our genetic material. It is assumed that about one-third of the approximately 20,000 genes in the human genome are required for brain development; this alone indicates the extraordinary complexity of the organ.

So how can we approach this extraordinary biological complexity with the goal of having machines mimic thought processes, i.e., create “artificial intelligence” (AI)?

Artificial intelligence is already producing some impressive results, such as machine victories over the best human chess players. Machines can now compete successfully not only in traditional board games such as chess and Go but also in modern real-time strategy video games, as researchers demonstrated with the online game “StarCraft II,” among others.

AI systems have also mastered poker, bluffing included. They can steer vehicles through heavy traffic with very few accidents and identify people in live video from thousands of surveillance cameras. These are all examples of what machine learning already achieves today.

Principles adopted from nature

What helped artificial intelligence achieve this breakthrough was the adoption of principles from nature. One is the multilayer neural network, which imitates stacked layers of nerve cells and makes it possible to approximate even highly complicated relationships. Another is the observation that computation must be closely coupled to the memory it needs; otherwise, moving the data back and forth becomes a bottleneck.
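Why layering matters can be shown in miniature. A single layer of threshold units cannot compute the XOR function (output 1 when exactly one input is 1), but a network with one hidden layer can. The weights below are hand-picked for illustration only; in practice such weights are learned from examples, and the function names are hypothetical.

```python
def step(x: float) -> int:
    """Threshold activation: the unit fires (1) if its weighted input is positive."""
    return 1 if x > 0 else 0


def xor_net(a: int, b: int) -> int:
    """Two-layer threshold network computing XOR with hand-picked weights."""
    # Hidden layer: one unit detects "a OR b", the other detects "a AND b".
    h_or = step(a + b - 0.5)
    h_and = step(a + b - 1.5)
    # Output layer: fire when OR is active but AND is not.
    return step(h_or - h_and - 0.5)


for a in (0, 1):
    for b in (0, 1):
        print(a, b, xor_net(a, b))
```

The hidden units split the problem into two simpler relationships that the output unit can then combine, which no single threshold unit can do on its own.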

Seen in this light, the progress of artificial intelligence rests primarily on the imitation of biomorphic design principles.

How does neuromorphic computing work?

When the scientific foundations of today's machine learning methods were laid in the 1950s, researchers had only a rough idea of how learning works in the brain at the level of individual neurons. The resulting algorithms are undoubtedly very powerful, but it is equally undeniable that nature does not learn the way these procedures are implemented today.

Learning with weaknesses

One could speculate that many of the limitations of today's artificial intelligence stem from this gap. A typical weakness of AI systems, for example, is their dependence on vast numbers of training examples; another is their limited ability to abstract or generalize correctly. This can lead, for example, to AI systems developing “biases.”

Today's AI systems need enormous amounts of data to learn.

Another deficit of current AI systems is that they are not embedded in a continuous flow of time. Yet only such embedding allows learning, adaptation to the environment, and action to be closely interwoven and coordinated by a common internal state. Only when machines possess these capabilities will they be able to take on complex tasks independently in a natural environment.

Taking a cue from reality

How can these weaknesses be overcome? Some scientists are turning to “neuromorphic computing,” a research direction built on the assumption that the answers will come from studying the natural model closely enough and understanding its mechanisms well enough.

The goal of neuromorphic computing is to transfer as much as possible of what is known about how the natural nervous system works to artificial neural systems; ideally, such a maximally biologically inspired artificial intelligence should deliver superior results.

However, there are also good reasons why other AI researchers rely on conventional methods rather than incorporating the findings of neuroscience. One of them is the complex way nerve cells communicate in nature: each individual nerve cell makes contact with about a thousand other cells, and some with far more. To reproduce this behavior in a computer, every signal a nerve cell sends would have to be turned into at least a thousand messages, each delivered to the right recipient cell.
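The fan-out problem can be made concrete with a small event-driven sketch. It assumes the fan-out of about a thousand mentioned above and random connectivity; the network size, the set of spiking neurons, and the helpers `build_network` and `deliver_spikes` are hypothetical and chosen only for illustration.

```python
import random
from collections import defaultdict


def build_network(n_neurons, fan_out, seed=0):
    """Connect each neuron to `fan_out` distinct, randomly chosen target neurons."""
    rng = random.Random(seed)
    network = {}
    for src in range(n_neurons):
        candidates = [t for t in range(n_neurons) if t != src]
        network[src] = rng.sample(candidates, fan_out)
    return network


def deliver_spikes(network, spiking):
    """Copy every spike to all of the spiking neuron's targets.

    Returns each target's inbox (the sources it heard from) and the
    total number of messages that had to be sent.
    """
    inbox = defaultdict(list)
    messages = 0
    for src in spiking:
        for target in network[src]:
            inbox[target].append(src)
            messages += 1
    return inbox, messages


# Hypothetical example: 1,100 neurons, each contacting 1,000 others.
network = build_network(n_neurons=1100, fan_out=1000)
inbox, messages = deliver_spikes(network, spiking=range(50))
print(messages)  # 50 spikes x 1,000 targets = 50000 messages
```

The number of messages grows with the number of spikes times the fan-out, so even a modest burst of activity produces a large volume of traffic that a conventional computer has to route one message at a time.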

Flexible connections

To make matters worse, the natural connections between nerve cells are not statically fixed but are constantly changing. Every day, about 10 percent of all neuronal connections in our brains are broken and replaced by new ones. Many external conditions determine which connections are dissolved and which are weakened or strengthened; we only have a rudimentary understanding of these conditions.
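A toy version of this turnover can be simulated by assuming the roughly 10 percent daily rate given above. Purely for illustration, the sketch chooses which connections to break and which to form at random. In the brain these choices depend on conditions we only partly understand, so random selection is the main assumption here, and `rewire` is a hypothetical helper.

```python
import random


def rewire(connections, n_neurons, fraction, rng):
    """Break a random `fraction` of the connections and create as many new ones.

    Connections are (pre, post) pairs. New connections never duplicate an
    existing or just-removed pair, and self-connections are excluded.
    """
    n_replace = round(len(connections) * fraction)
    removed = set(rng.sample(sorted(connections), n_replace))
    new = set()
    while len(new) < n_replace:
        pair = (rng.randrange(n_neurons), rng.randrange(n_neurons))
        if pair[0] != pair[1] and pair not in connections:
            new.add(pair)
    return (connections - removed) | new


rng = random.Random(1)
n = 100
all_pairs = [(i, j) for i in range(n) for j in range(n) if i != j]
day0 = set(rng.sample(all_pairs, 1000))
day1 = rewire(day0, n, fraction=0.10, rng=rng)
print(len(day1), len(day0 & day1))  # 1000 900
```

The total number of connections stays constant while a tenth of them changes every step. A more realistic model would replace the random choice with rules that depend on activity.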

What we do know, however, is that the signals of entire populations of nerve cells form spatial and temporal patterns and that learning involves the targeted rewiring of connections. This raises a broader question: how can neuromorphic computing help us understand the mechanisms of learning and the assembly, disassembly, and reassembly of neuronal connections?

Why neuromorphic computing has a future

Some scientists consider neuromorphic computing an unnecessary detour. One of their arguments is that, as supercomputers steadily grow more powerful, the deficits described will disappear on their own. The last decade, however, has shown that the expectations placed on ever larger computers have far exceeded what is technically possible.

The miniaturization of electronics, the basis of our computer technology, has slowed down considerably; among experts, the question is no longer whether miniaturization will come to an end but when. For some time now, the energy consumption of circuits has also not been falling fast enough to sustain the performance gains of past decades.

Artificial synapses on a silicon chip

Neuromorphic computing appears to be a pioneering approach to realizing biologically inspired artificial intelligence. Neuromorphic computing is about transferring the currently known biological structures of the nervous system as directly as possible to electronic circuits.

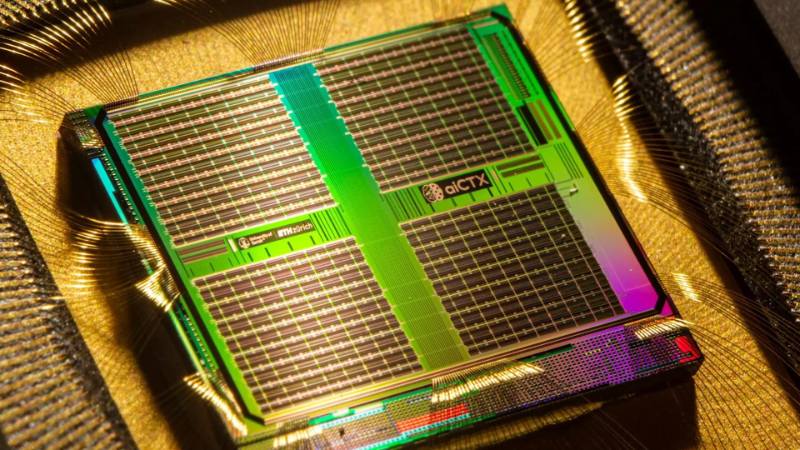

For example, scientists have succeeded in reproducing individual neurons together with their synapses (the contact points between nerve cells at which impulses are transmitted) as microelectronic circuits on silicon chips. These circuits share as many properties as possible with their natural counterparts; the physical model draws on the biological knowledge available at the current state of research. Other research teams have constructed artificial synapses based on magnetic circuits or photonic devices.
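To give a feel for what such a neuron circuit reproduces, here is the leaky integrate-and-fire neuron, a standard simplified textbook model. The membrane voltage leaks toward a resting value, integrates incoming current, and emits a spike and resets when it crosses a threshold. Using this model is an assumption for illustration, since the chips described here may implement richer models, and all parameter values below are hypothetical. A silicon circuit solves these dynamics physically; the code approximates them step by step.

```python
def simulate_lif(input_current, dt=0.1, tau_m=10.0, v_rest=-65.0,
                 v_threshold=-50.0, v_reset=-70.0, r_m=10.0):
    """Leaky integrate-and-fire neuron, integrated with the forward Euler method.

    input_current: input currents in nA, one per time step of dt ms.
    r_m is the membrane resistance in megohms, so r_m * current is in mV.
    Returns the membrane voltage trace (mV) and the spike times (ms).
    """
    v = v_rest
    voltages, spikes = [], []
    for k, i_in in enumerate(input_current):
        # The membrane leaks toward rest while integrating the input current.
        dv = (-(v - v_rest) + r_m * i_in) / tau_m
        v += dv * dt
        if v >= v_threshold:
            # Threshold crossed: record a spike and reset the membrane.
            spikes.append(k * dt)
            v = v_reset
        voltages.append(v)
    return voltages, spikes


# 100 ms of constant input at dt = 0.1 ms (hypothetical values).
_, weak = simulate_lif([1.0] * 1000)
_, strong = simulate_lif([2.0] * 1000)
print(len(weak), len(strong))
```

With the weak input the voltage settles below threshold and the neuron stays silent; with the stronger input it fires repeatedly. Shrinking the time constants in hardware is one way an electronic neuron can run faster than its biological model.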

What is currently feasible is limited partly by neuroscience and partly by microelectronics. Microelectronics, for example, cannot reproduce the nerve cell circuits responsible for learning in their full complexity, but it can match the speed of natural processes and even run them significantly faster.

Computing with the hybrid plasticity model

Neuromorphic systems are at their best when it comes to learning. The findings that neuroscientists have gained in researching the brain’s ability to learn and the interconnection of neurons can be directly transferred to electronic models and tested.

A first model

The new “hybrid plasticity model” draws equally on insights from neuroscience, electronics, and computer science. For each possible connection between electronic neurons, the plasticity model provides a circuit that measures the signal flow. A conventional computer system could not perform this task anywhere near as efficiently or compactly, because all signals must be monitored simultaneously, a task that nature masters easily with hundreds of trillions of synapses working in parallel.
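The article does not say exactly what each measuring circuit records. A common choice in spike-based models, assumed here, is the timing correlation between the presynaptic and postsynaptic spikes of a connection: evidence that the sender fired shortly before the receiver (causal) or shortly after it (acausal), weighted by an exponential decay with time constant `tau`. The `CorrelationSensors` class is hypothetical and pairs each spike only with the most recent spike of the partner neuron. Python visits the sensors one after another, whereas the hardware described updates all of them at once.

```python
import math


class CorrelationSensors:
    """One timing sensor per (pre, post) connection, updated on every spike."""

    def __init__(self, n_pre: int, n_post: int, tau: float = 20.0):
        self.tau = tau  # decay time constant in ms (hypothetical value)
        self.last_pre = [None] * n_pre    # most recent spike time per sender
        self.last_post = [None] * n_post  # most recent spike time per receiver
        # causal[i][j]: evidence that sender i fired shortly before receiver j
        self.causal = [[0.0] * n_post for _ in range(n_pre)]
        # acausal[i][j]: evidence that sender i fired shortly after receiver j
        self.acausal = [[0.0] * n_post for _ in range(n_pre)]

    def pre_spike(self, i: int, t: float) -> None:
        """Sender i fires: update the acausal sensor of each of its connections."""
        self.last_pre[i] = t
        for j, t_post in enumerate(self.last_post):
            if t_post is not None:
                self.acausal[i][j] += math.exp(-(t - t_post) / self.tau)

    def post_spike(self, j: int, t: float) -> None:
        """Receiver j fires: update the causal sensor of each incoming connection."""
        self.last_post[j] = t
        for i, t_pre in enumerate(self.last_pre):
            if t_pre is not None:
                self.causal[i][j] += math.exp(-(t - t_pre) / self.tau)


sensors = CorrelationSensors(n_pre=2, n_post=1)
sensors.pre_spike(0, t=0.0)   # sender 0 fires
sensors.post_spike(0, t=5.0)  # receiver fires 5 ms later: causal for sender 0
sensors.pre_spike(1, t=10.0)  # sender 1 fires after the receiver: acausal
print(round(sensors.causal[0][0], 3), round(sensors.acausal[1][0], 3))  # 0.779 0.779
```

Each sensor only ever touches its own connection's counters, which is what makes the task easy to parallelize in hardware and expensive to emulate serially.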

Compared to the natural model, our current electronic systems are only modest miniature versions. In the future, neuromorphic systems with up to a trillion connections should become possible. These would make it possible to test how complex functions, such as the movements of humanoid robots, are learned.

The crucial factor here is that the parallel measurements of all signal flow between nerve cells must be processed by a special computer core within the same microchip. Only then can the computer core quickly and directly evaluate the measurement results for all signal flows without having to exchange information over distances of more than a few millimeters.

Fast learning even with simple rules

Because the hybrid plasticity model relies on a freely programmable microprocessor, scientists can specify the rules by which the connections between the nerve cells are changed.

Experiments have shown that even relatively simple rules quickly lead to stable learning and efficient use of the available connections, provided that, like their biological models, they take into account the different temporal and spatial structures in the signals.
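As an example of a simple rule that respects the temporal structure of signals, the sketch below uses pair-based spike-timing-dependent plasticity (STDP), a well-studied rule from neuroscience. This is a stand-in: the article does not say which rules were tested, and all parameter values and helper names are hypothetical. Passing the rule as an argument mirrors, in a loose way, the idea of a freely programmable plasticity processor.

```python
import math


def stdp(weight, delta_t, a_plus=0.01, a_minus=0.012,
         tau_plus=20.0, tau_minus=20.0, w_min=0.0, w_max=1.0):
    """Pair-based spike-timing-dependent plasticity.

    delta_t = t_post - t_pre in ms. Pre-before-post (delta_t > 0) strengthens
    the connection, post-before-pre weakens it, and the effect fades
    exponentially with the size of the timing difference.
    """
    if delta_t > 0:
        weight += a_plus * math.exp(-delta_t / tau_plus)
    elif delta_t < 0:
        weight -= a_minus * math.exp(delta_t / tau_minus)
    # Clip the weight to the range [w_min, w_max].
    return min(w_max, max(w_min, weight))


def apply_rule(weights, pairings, rule):
    """Apply any plasticity rule to (pre, post, delta_t) spike pairings."""
    for pre, post, delta_t in pairings:
        weights[pre][post] = rule(weights[pre][post], delta_t)
    return weights


weights = [[0.5, 0.5], [0.5, 0.5]]
apply_rule(weights, [(0, 1, 5.0), (1, 0, -5.0)], rule=stdp)
print(weights)
```

Swapping `stdp` for another function changes how the network learns without touching the code that collects the spike pairings.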

This learning is “hybrid” in the sense that it combines a physical and a virtual replica of nature. Mathematics and engineering, two fundamental cultural techniques of humankind, are needed to bring us one step closer to understanding one of the most fundamental abilities of living nature: the principles by which nervous systems learn. It is precisely this ability to learn that makes the phenomenon of “culture” possible in the first place.

Bibliography

- On-chip photonic synapse, Science Advances, 2017; doi: 10.1126/sciadv.1700160

- Ultralow power artificial synapses, Science Advances, 2018; doi: 10.1126/sciadv.1701329

- Grandmaster level in StarCraft II using multi-agent reinforcement learning, Nature, 2019; doi: 10.1038/s41586-019-1724-z